本文最后更新于 704 天前,其中的信息可能已经过时,如有错误请发送邮件到wuxianglongblog@163.com

Theano 实例:更复杂的网络

import theano

import theano.tensor as T

import numpy as np

from load import mnist

from theano.sandbox.rng_mrg import MRG_RandomStreams as RandomStreams

srng = RandomStreams()

def floatX(X):

return np.asarray(X, dtype=theano.config.floatX)Using gpu device 1: Tesla C2075 (CNMeM is disabled)上一节我们用了一个简单的神经网络来训练 MNIST 数据,这次我们使用更复杂的网络来进行训练,同时加入 dropout 机制,防止过拟合。

这里采用比较简单的 dropout 机制,即将输入值按照一定的概率随机置零。

def dropout(X, prob=0.):

if prob > 0:

X *= srng.binomial(X.shape, p=1-prob, dtype = theano.config.floatX)

X /= 1 - prob

return X之前我们采用的的激活函数是 sigmoid,现在我们使用 rectify 激活函数。

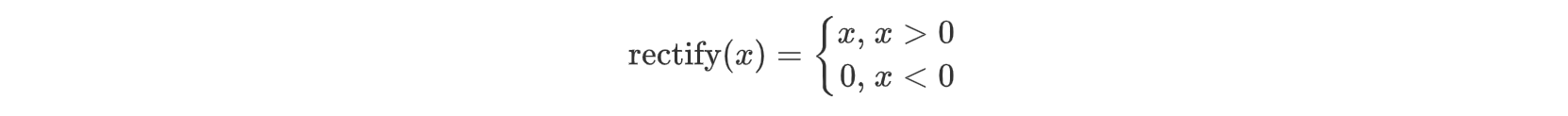

这可以使用 T.nnet.relu(x, alpha=0) 来实现,它本质上相当于:T.switch(x > 0, x, alpha * x),而 rectify 函数的定义为:

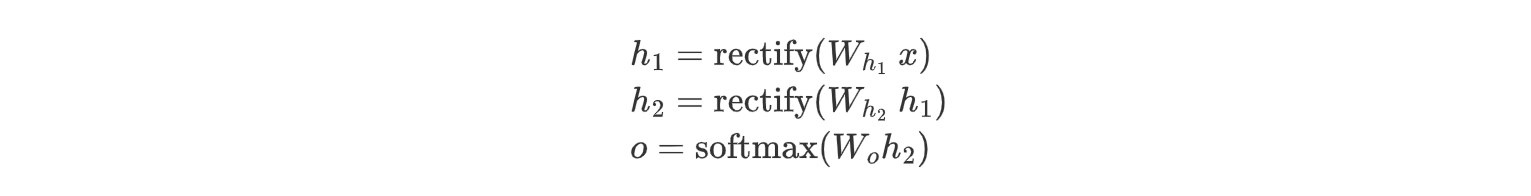

之前我们构造的是一个单隐层的神经网络结构,现在我们构造一个双隐层的结构即“输入-隐层1-隐层2-输出”的全连接结构。

Theano 自带的 T.nnet.softmax() 的 GPU 实现目前似乎有 bug 会导致梯度溢出的问题,因此自定义了 softmax 函数:

def softmax(X):

e_x = T.exp(X - X.max(axis=1).dimshuffle(0, 'x'))

return e_x / e_x.sum(axis=1).dimshuffle(0, 'x')

def model(X, w_h1, w_h2, w_o, p_drop_input, p_drop_hidden):

"""

input:

X: input data

w_h1: weights input layer to hidden layer 1

w_h2: weights hidden layer 1 to hidden layer 2

w_o: weights hidden layer 2 to output layer

p_drop_input: dropout rate for input layer

p_drop_hidden: dropout rate for hidden layer

output:

h1: hidden layer 1

h2: hidden layer 2

py_x: output layer

"""

X = dropout(X, p_drop_input)

h1 = T.nnet.relu(T.dot(X, w_h1))

h1 = dropout(h1, p_drop_hidden)

h2 = T.nnet.relu(T.dot(h1, w_h2))

h2 = dropout(h2, p_drop_hidden)

py_x = softmax(T.dot(h2, w_o))

return h1, h2, py_x随机初始化权重矩阵:

def init_weights(shape):

return theano.shared(floatX(np.random.randn(*shape) * 0.01))

w_h1 = init_weights((784, 625))

w_h2 = init_weights((625, 625))

w_o = init_weights((625, 10))定义变量:

X = T.matrix()

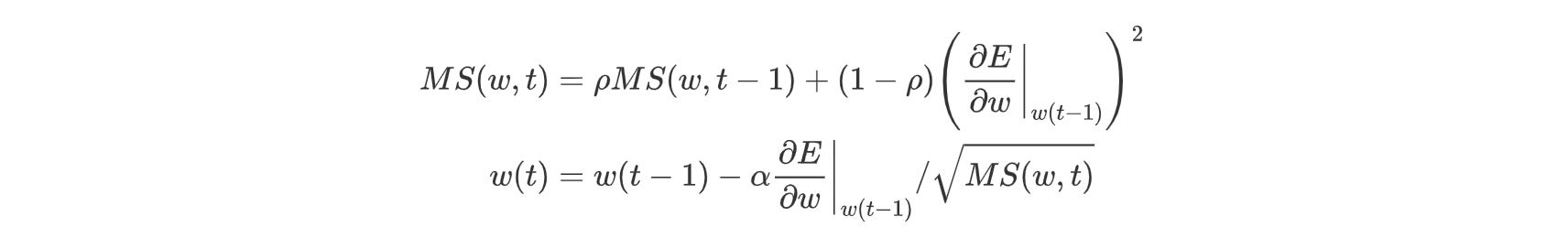

Y = T.matrix()定义更新的规则,之前我们使用的是简单的 SGD,这次我们使用 RMSprop 来更新,其规则为:

def RMSprop(cost, params, accs, lr=0.001, rho=0.9, epsilon=1e-6):

grads = T.grad(cost=cost, wrt=params)

updates = []

for p, g, acc in zip(params, grads, accs):

acc_new = rho * acc + (1 - rho) * g ** 2

gradient_scaling = T.sqrt(acc_new + epsilon)

g = g / gradient_scaling

updates.append((acc, acc_new))

updates.append((p, p - lr * g))

return updates训练函数:

# 有 dropout,用来训练

noise_h1, noise_h2, noise_py_x = model(X, w_h1, w_h2, w_o, 0.2, 0.5)

cost = T.mean(T.nnet.categorical_crossentropy(noise_py_x, Y))

params = [w_h1, w_h2, w_o]

accs = [theano.shared(p.get_value() * 0.) for p in params]

updates = RMSprop(cost, params, accs, lr=0.001)

# 训练函数

train = theano.function(inputs=[X, Y], outputs=cost, updates=updates, allow_input_downcast=True)预测函数:

# 没有 dropout,用来预测

h1, h2, py_x = model(X, w_h1, w_h2, w_o, 0., 0.)

# 预测的结果

y_x = T.argmax(py_x, axis=1)

predict = theano.function(inputs=[X], outputs=y_x, allow_input_downcast=True)训练:

trX, teX, trY, teY = mnist(onehot=True)

for i in range(50):

for start, end in zip(range(0, len(trX), 128), range(128, len(trX), 128)):

cost = train(trX[start:end], trY[start:end])

print "iter {:03d} accuracy:".format(i + 1), np.mean(np.argmax(teY, axis=1) == predict(teX))iter 001 accuracy: 0.943

iter 002 accuracy: 0.9665

iter 003 accuracy: 0.9732

iter 004 accuracy: 0.9763

iter 005 accuracy: 0.9767

iter 006 accuracy: 0.9802

iter 007 accuracy: 0.9795

iter 008 accuracy: 0.979

iter 009 accuracy: 0.9807

iter 010 accuracy: 0.9805

iter 011 accuracy: 0.9824

iter 012 accuracy: 0.9816

iter 013 accuracy: 0.9838

iter 014 accuracy: 0.9846

iter 015 accuracy: 0.983

iter 016 accuracy: 0.9837

iter 017 accuracy: 0.9841

iter 018 accuracy: 0.9837

iter 019 accuracy: 0.9835

iter 020 accuracy: 0.9844

iter 021 accuracy: 0.9837

iter 022 accuracy: 0.9839

iter 023 accuracy: 0.984

iter 024 accuracy: 0.9851

iter 025 accuracy: 0.985

iter 026 accuracy: 0.9847

iter 027 accuracy: 0.9851

iter 028 accuracy: 0.9846

iter 029 accuracy: 0.9846

iter 030 accuracy: 0.9853

iter 031 accuracy: 0.985

iter 032 accuracy: 0.9844

iter 033 accuracy: 0.9849

iter 034 accuracy: 0.9845

iter 035 accuracy: 0.9848

iter 036 accuracy: 0.9868

iter 037 accuracy: 0.9864

iter 038 accuracy: 0.9866

iter 039 accuracy: 0.9859

iter 040 accuracy: 0.9857

iter 041 accuracy: 0.9853

iter 042 accuracy: 0.9855

iter 043 accuracy: 0.9861

iter 044 accuracy: 0.9865

iter 045 accuracy: 0.9872

iter 046 accuracy: 0.9867

iter 047 accuracy: 0.9868

iter 048 accuracy: 0.9863

iter 049 accuracy: 0.9862

iter 050 accuracy: 0.9856